Handwritten Digit Recognition

Description

This application demonstrates handwritten digit recognition with a convolutional neural network trained on the MNIST dataset. It walks through each stage of a deep learning workflow: preparing the dataset (loading it, previewing samples, and optionally reducing its size), training the model to recognize patterns in handwritten digits, evaluating its accuracy, and making real-time predictions on new digits that you draw yourself.

Dataset

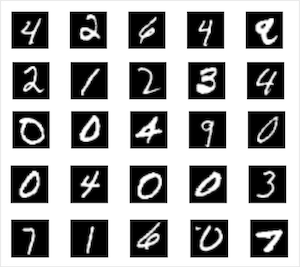

The MNIST dataset is a standard benchmark in machine learning and computer vision. It consists of 28x28-pixel grayscale images of handwritten digits (0-9). This section lets you load the dataset, preview sample images, and reduce the dataset size to experiment with different training and testing scenarios. This app uses 55,000 training samples and 10,000 test samples (the original MNIST training set contains 60,000 images).

- Training Samples: 55,000

- Test Samples: 10,000

- Image Dimensions: 28x28 pixels

- Number of Classes: 10 (Digits 0–9)
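The categorical cross-entropy loss used in the Training section expects each label as a one-hot vector, and pixel values are commonly scaled to [0, 1] before training. The sketch below illustrates both steps. It assumes each image arrives as a flat list of 784 integers in the range 0-255; the helper names are hypothetical, not taken from the app.

```python
NUM_CLASSES = 10  # digits 0-9

def normalize_pixels(pixels):
    """Map raw 0-255 grayscale values to floats in [0, 1]."""
    return [p / 255.0 for p in pixels]

def one_hot(label, num_classes=NUM_CLASSES):
    """Return a vector with 1.0 at index `label` and 0.0 elsewhere."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} is out of range")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

# A flattened 28x28 image has 28 * 28 = 784 pixel values.
image = [0] * 784
image[0] = 255            # one fully white pixel
x = normalize_pixels(image)
y = one_hot(3)            # label for the digit 3
print(len(x), x[0], y)
```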

Adjust Dataset Size

You can reduce the size of the training dataset by selecting a percentage below. A smaller training set speeds up training and lets you try out models quickly, though it usually lowers accuracy.
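One way to implement a percentage-based reduction is to keep a random subset of the training samples, as in this sketch. The function `reduce_dataset` is hypothetical; the app may instead keep the first N samples or use another selection rule.

```python
import random

def reduce_dataset(images, labels, percent, seed=None):
    """Keep a random `percent` share (0 < percent <= 100) of the samples.

    Hypothetical helper: image/label pairs stay aligned because the same
    indices are used for both lists.
    """
    if not 0 < percent <= 100:
        raise ValueError("percent must be in (0, 100]")
    if len(images) != len(labels):
        raise ValueError("images and labels must have the same length")
    keep = int(len(images) * percent / 100)
    rng = random.Random(seed)                 # fixed seed -> reproducible subset
    indices = rng.sample(range(len(images)), keep)
    return [images[i] for i in indices], [labels[i] for i in indices]

# Stand-in data: 55,000 "images" (just ids) with labels derived from the id.
images = list(range(55_000))
labels = [i % 10 for i in images]
x_small, y_small = reduce_dataset(images, labels, 10, seed=0)
print(len(x_small), len(y_small))
```

Random sampling keeps the mix of digit classes roughly proportional to the full set, whereas taking the first N samples can skew it if the data happens to be ordered by class.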

Updated Dataset Details

After applying a reduction, the dataset size will be updated as shown below:

- Training Samples: N/A

- Test Samples: N/A

Model

The model is a Convolutional Neural Network (CNN) designed to classify the 28x28 grayscale MNIST digit images. Convolutional layers extract spatial features such as edges and strokes, pooling layers shrink the spatial dimensions while keeping the strongest responses, and dense layers perform the final classification into the 10 digit classes. The architecture is lightweight, which keeps training fast, yet it is well-suited to MNIST. Below is a breakdown of each layer in the model.
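To make the convolution and pooling steps concrete, this sketch applies one 3x3 filter, a ReLU, and 2x2 max pooling to a tiny single-channel image. It assumes stride 1 with no padding for the convolution and stride 2 for pooling, which the layer table does not state. A real Conv2D layer also sums over input channels, adds a bias, and learns many filters at once.

```python
def conv2d_valid(image, kernel):
    """Single-channel 2-D convolution (cross-correlation, as deep learning
    libraries implement it) with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Replace negative responses with 0."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2; an odd trailing row/column is dropped."""
    h, w = len(feature_map) // 2, len(feature_map[0]) // 2
    return [
        [max(feature_map[2 * i][2 * j], feature_map[2 * i][2 * j + 1],
             feature_map[2 * i + 1][2 * j], feature_map[2 * i + 1][2 * j + 1])
         for j in range(w)]
        for i in range(h)
    ]

# 6x6 image: dark left half, bright right half (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
vertical_edge = [[-1, 0, 1] for _ in range(3)]   # responds to dark-to-bright edges
features = relu(conv2d_valid(image, vertical_edge))
pooled = max_pool_2x2(features)
print(features[0])   # [0, 3, 3, 0]
print(pooled)        # [[3, 3], [3, 3]]
```

The filter responds only where the edge is, and pooling halves each spatial dimension while keeping those peak responses.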

| Layer Type | Details |
|---|---|
| Input | Shape: [28, 28, 1] |
| Conv2D | Filters: 32, Kernel Size: 3x3, Activation: ReLU |
| MaxPooling2D | Pool Size: 2x2 |
| Conv2D | Filters: 64, Kernel Size: 3x3, Activation: ReLU |
| MaxPooling2D | Pool Size: 2x2 |
| Flatten | Flatten the input |
| Dense | Units: 128, Activation: ReLU |
| Dense | Units: 10, Activation: Softmax |
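The layer table does not list output shapes or parameter counts, but they follow from the layer settings. This sketch derives them under stated assumptions: stride 1 with no ("valid") padding for each Conv2D layer, non-overlapping 2x2 pooling, and a bias term in every Conv2D and Dense layer. If the app uses "same" padding, the shapes and totals will differ.

```python
import math

# The layer table as data: (type, settings...)
ARCH = [
    ("Conv2D", 32, 3),        # filters, kernel size
    ("MaxPooling2D", 2),      # pool size
    ("Conv2D", 64, 3),
    ("MaxPooling2D", 2),
    ("Flatten",),
    ("Dense", 128),           # units
    ("Dense", 10),
]

def summarize(arch, input_shape=(28, 28, 1)):
    """Return (layer type, output shape, parameter count) for each layer."""
    shape = input_shape
    rows = []
    for layer in arch:
        kind = layer[0]
        if kind == "Conv2D":
            filters, k = layer[1], layer[2]
            h, w, c = shape
            params = k * k * c * filters + filters     # kernel weights + one bias per filter
            shape = (h - k + 1, w - k + 1, filters)    # assumed "valid" padding, stride 1
        elif kind == "MaxPooling2D":
            p = layer[1]
            h, w, c = shape
            params = 0
            shape = (h // p, w // p, c)                # stride = pool size, remainder dropped
        elif kind == "Flatten":
            params = 0
            shape = (math.prod(shape),)
        elif kind == "Dense":
            units = layer[1]
            params = shape[0] * units + units          # weight matrix + biases
            shape = (units,)
        else:
            raise ValueError(f"unknown layer type: {kind}")
        rows.append((kind, shape, params))
    return rows

rows = summarize(ARCH)
for kind, shape, params in rows:
    print(f"{kind:<13} {str(shape):<14} {params:>7,}")
print(f"Total params: {sum(p for _, _, p in rows):,}")  # Total params: 225,034
```

Under these assumptions the model has 225,034 trainable parameters, and the first Dense layer (1,600 inputs to 128 units) accounts for about 91% of them. If the app builds the model with TensorFlow.js, `model.summary()` prints the actual shapes and counts for comparison.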

Training

This section trains the Convolutional Neural Network (CNN) on the MNIST dataset using the Adam optimizer, categorical cross-entropy as the loss function, and accuracy as the evaluation metric. You can set the maximum number of epochs (full passes over the training data) and watch the loss change as the model learns. More epochs make training take longer but give the model more passes over the data to learn from.

Compile configuration:

- optimizer: adam

- loss: categoricalCrossentropy

- metrics: accuracy
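The categorical cross-entropy loss compares the softmax output of the final Dense layer with the one-hot label. For a one-hot label it reduces to the negative log of the probability assigned to the correct digit. This sketch computes both; clipping predictions with a small `eps` guards against log(0), and the value 1e-7 is an assumption rather than the app's setting.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 (max subtracted for numerical stability)."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Loss = -sum_i y_true[i] * log(y_pred[i]); predictions are clipped to avoid log(0)."""
    return -sum(t * math.log(min(max(p, eps), 1 - eps)) for t, p in zip(y_true, y_pred))

label = [0.0] * 10
label[3] = 1.0                                   # one-hot label for the digit 3
confident = softmax([0.0, 0.0, 0.0, 5.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
uniform = [0.1] * 10                             # an uninformed guess over 10 classes
loss_confident = categorical_crossentropy(label, confident)
loss_uniform = categorical_crossentropy(label, uniform)
print(loss_confident, loss_uniform)
```

A uniform guess over ten classes gives a loss of ln(10), about 2.30, so an untrained model often reports a loss near that value in its first epoch.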

Epoch #: 0 | Loss: N/A
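To show what the Adam optimizer does in each epoch, this sketch applies the Adam update rule to a toy problem instead of the CNN: fitting a line y = w*x + b by mean squared error. It prints the loss in the same "Epoch #: N | Loss: value" form as the readout above. The values beta1 = 0.9, beta2 = 0.999 and eps = 1e-8 are commonly used defaults; the learning rate of 0.1 is chosen so this toy example converges quickly (0.001 is a more common default), and the app's actual settings are not stated.

```python
import math

def adam_fit(xs, ys, epochs=200, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """Fit y = w*x + b with Adam. Each epoch here is one full-batch update on
    this tiny dataset; real training loops over many mini-batches per epoch."""
    params = [0.0, 0.0]            # [w, b]
    m = [0.0, 0.0]                 # running mean of gradients (first moment)
    v = [0.0, 0.0]                 # running mean of squared gradients (second moment)
    n = len(xs)
    losses = []
    for t in range(1, epochs + 1):
        w, b = params
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        losses.append(sum(e * e for e in errors) / n)        # loss before this update
        grads = [
            2 * sum(e * x for e, x in zip(errors, xs)) / n,   # dL/dw
            2 * sum(errors) / n,                               # dL/db
        ]
        for i, g in enumerate(grads):
            m[i] = beta1 * m[i] + (1 - beta1) * g
            v[i] = beta2 * v[i] + (1 - beta2) * g * g
            m_hat = m[i] / (1 - beta1 ** t)                    # bias correction
            v_hat = v[i] / (1 - beta2 ** t)
            params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params, losses

xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [2 * x + 1 for x in xs]          # target line: w = 2, b = 1
params, losses = adam_fit(xs, ys)
for epoch in (0, 49, 99, 199):
    print(f"Epoch #: {epoch + 1} | Loss: {losses[epoch]:.4f}")
```

The bias correction matters in the first few steps: m and v start at zero, and dividing by 1 - beta**t keeps the early update sizes from being underestimated.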

Model Evaluation

The model evaluation section assesses the performance of the trained model. Use the button below to calculate the accuracy for each digit class, along with the overall model accuracy.

Class Accuracy

| Class | Accuracy | # Samples |
|---|---|---|

Model Accuracy: N/A
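The per-class table and the overall accuracy can be computed from true and predicted digit labels, as in this sketch. It assumes both are available as integer lists (for example, the predicted label taken as the index of the highest softmax output); `class_accuracy` is a hypothetical helper.

```python
from collections import Counter

def class_accuracy(y_true, y_pred, num_classes=10):
    """Return ({class: (accuracy or None, sample count)}, overall accuracy)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need equally sized, non-empty label lists")
    totals = Counter(y_true)                                     # samples per class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    per_class = {
        c: (correct[c] / totals[c] if totals[c] else None, totals[c])
        for c in range(num_classes)
    }
    overall = sum(correct.values()) / len(y_true)
    return per_class, overall

y_true = [0, 0, 1, 1, 1, 2]
y_pred = [0, 1, 1, 1, 0, 2]
per_class, overall = class_accuracy(y_true, y_pred)
for c in range(3):
    acc, n = per_class[c]
    print(f"| {c} | {acc:.2f} | {n} |")
print(f"Model Accuracy: {overall:.2f}")
```

The overall accuracy weights every sample equally, so it is not the plain average of the per-class accuracies when classes have different sample counts.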

Prediction

In this section, you can draw a digit on the canvas, and the trained model will predict the digit based on your input. Use the "Predict" button to see the prediction, and the "Clear" button to reset the canvas for a new attempt. The predicted digit will be displayed below the buttons.

Predicted Digit: N/A
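This section does not say how the drawing becomes model input. A common approach, sketched here under assumptions, is to shrink the canvas to 28x28 by averaging blocks of pixels, scale values to [0, 1] (assuming the model was trained on images scaled the same way), and report the class with the highest predicted probability. The sketch assumes a square grayscale canvas whose side is a multiple of 28 (for example 280 pixels) with light strokes on a dark background, like MNIST; `downsample` and `predicted_digit` are hypothetical helpers.

```python
def downsample(canvas, target=28):
    """Average-pool a square grayscale canvas (values 0-255) to target x target, scaled to [0, 1]."""
    size = len(canvas)
    if size == 0 or size % target or any(len(row) != size for row in canvas):
        raise ValueError("canvas must be square with a side that is a multiple of target")
    f = size // target                     # canvas pixels per output pixel along each axis
    out = []
    for i in range(target):
        row = []
        for j in range(target):
            block = [canvas[i * f + di][j * f + dj] for di in range(f) for dj in range(f)]
            row.append(sum(block) / len(block) / 255.0)
        out.append(row)
    return out

def predicted_digit(probabilities):
    """Return the index of the largest probability, i.e. the predicted digit."""
    return max(range(len(probabilities)), key=lambda i: probabilities[i])

# A 280x280 black canvas with one fully white 10x10 patch in the top-left corner.
canvas = [[0] * 280 for _ in range(280)]
for r in range(10):
    for c in range(10):
        canvas[r][c] = 255
small = downsample(canvas)
print(len(small), len(small[0]), small[0][0], small[0][1])   # 28 28 1.0 0.0

# Hypothetical model output with the highest probability at index 7.
print(predicted_digit([0.01] * 7 + [0.9] + [0.01] * 2))      # 7
```

In a TensorFlow.js app, the resizing step is often done with `tf.browser.fromPixels` and `tf.image.resizeBilinear`, and the predicted digit with `argMax` on the model output.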